Touch Support

At runtime, the WinCC OA UI supports touch controls in parallel with mouse and keyboard control.

Notes and Requirements

- The UI is set up for touch controls (on-screen keyboard, hidden scroll bars) by using either the UI manager option "-touch" or the config entry "usesTouchScreen = 1" in the [UI] section. The virtual keyboard is only available if the -touch option is set.

- In touch mode, scroll bars are no longer shown for scrollable widgets (Table, SelectionList, Tree, DpTypeView, DpTreeView, and Module, that is, the area where the panel is shown), since these widgets can be operated with a pan gesture.

- To prevent conflicts between the Windows settings and the WinCC OA settings, the touch settings of Windows have to be configured. Open Control Panel - Pen and Touch. In the Touch tab, select Press and hold and click Settings. The checkbox "Enable press and hold for right clicking" must be cleared.
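As a minimal sketch, the config entry named in the first note could look as follows in the project config file. The section name is written in lowercase here, which is an assumption about how the section appears in the file. Note that, as stated above, the virtual keyboard additionally requires the -touch option of the UI manager.

```
[ui]
# Set up the UI for touch controls (assumed placement in the project config file)
usesTouchScreen = 1
```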

Touch operation

The following gestures are available when using the touch mode:

Left mouse click

A left mouse click is performed by tapping on the desired spot of the panel.

Double click

A double click is performed by tapping twice in quick succession on the same spot.

Right mouse press / Long tap

To trigger a right mouse click event (RightMousePressed), tap the desired spot and keep the finger on it for 700 milliseconds instead of releasing it.

Pinching

To zoom a panel (=pinching), place two fingers on the display and then move them together or spread them apart.

Panning

Moving (=panning) the panel is done by touching the display with one finger and then moving the finger.

Swipe

A swipe gesture with one or two fingers in any direction triggers the GestureTriggered event, which is available for panels and embedded modules. This gesture only works if the panel is not bigger than the displayed area. If the panel is bigger, panning is executed instead and the GestureTriggered event is not triggered. Refer to Properties and events of the panel for a detailed description of the GestureTriggered event.

The distance between the fingers for a swipe gesture can be defined with the config entry touchDetectionDistance.

Two-finger swipe gestures are not supported on GNOME desktops and in the ULC UX.

Scrollbars

To make the handling easier, scrollbars are displayed bigger in the touch mode.

In general, all changes to widgets in touch mode are stored in the "touchscreen.css" file under <wincc_oa_path>/config. Custom settings can therefore be defined in this file.

Two hand operation

The two hand operation can be configured to prevent commands from being executed by touching the monitor unintentionally.

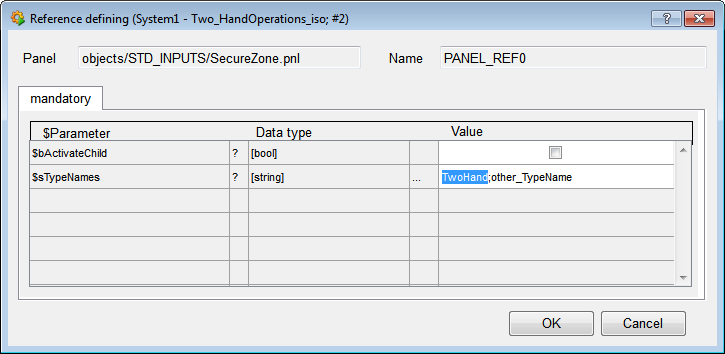

The two hand button disables all objects with a defined type name. When the two hand button "TwoHand_op" (in STD_Inputs) is inserted into the panel by drag & drop, a dialog window for its $-parameters opens.

If the checkbox "$bActivateChild" is enabled, touch gestures can also be used in child panels.

Generally, touch gestures across different windows are not possible with 2 root panels. However, embedded modules can be used with the two hand operation.

Two hand operation is supported on windows, if the opening of the child-panel has been properly synchronized (see twoHands_op.pnl initialization), e.g. with a delay in the "Initialize" script or later from the "Clicked" script in the main panel.

The type names of all objects that shall respond to the two hand operation must be passed to the $-parameter "$sTypeNames". Multiple names must be separated by a semicolon (;), for example "TypeA;TypeB" (placeholder names).

All shapes with a matching type name are locked for operation, which means that they are disabled during initialization. The objects can only be enabled by pressing and holding the two hand button. Therefore they cannot be pressed unintentionally.

The following shapes support the two hand operation:

- Checkbox

- Label

- Push button

- Radio box

- Slider widget

- Spin box

- Textfield

- TextEdit

- Toggle button EWO

Events

Mouse / touch events

The UI can be operated with mouse and touch gestures in parallel. If a touch gesture triggers an event, it is mapped to the corresponding mouse event. This applies to the following events:

MousePressed, MouseReleased, RightMousePressed, Clicked, DoubleClicked

To differentiate whether an event was triggered by mouse or by touch, additional elements are passed in the mapping of the respective event's main function main(mapping event). The boolean flag isSynthesized is TRUE if an event was triggered by a touch gesture.

| Mapping element | Description |

| buttons | MOUSE_LEFT, MOUSE_RIGHT, MOUSE_MIDDLE. Represents the button states when the event was triggered. The "buttons" state is an OR combination of these MOUSE_* elements (=CTRL constants). For the MousePressed and DoubleClicked events, in contrast to MouseReleased, the button which triggered the event is also included. |

| buttonsAsString | "LeftButton", "RightButton", "MiddleButton". The names of the buttons that are pressed while triggering the event. If multiple buttons are pressed, they are returned as one string separated by the "|" character. |

| button | The state of the button which triggered the event. |

| buttonAsString | "LeftButton", "RightButton", "MiddleButton". Name of the button which triggered the event. |

| modifiersAsString | "ShiftModifier", "ControlModifier", "AltModifier", "MetaModifier". The names of the modifier keys that are pressed while triggering the event. If multiple keys are pressed, they are returned as one string separated by the "|" character. |

| localPos | X,Y mapping of the mouse position relative to the panel. |

| globalPos | X,Y mapping of the global mouse position on the screen. |

| modifiers | KEY_SHIFT, KEY_CONTROL, KEY_ALT, KEY_META. Represents the keyboard modifier states when the event was triggered. The "modifiers" state is an OR combination of these CTRL constants. |

| isSynthesized | TRUE if the event was triggered by a touch event, FALSE if it was triggered by the mouse. |
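The following CTRL sketch illustrates how the mapping elements listed above might be evaluated in a MousePressed event script. It is not taken from the product examples. It assumes that the elements can be read with the usual mapping index syntax and that "buttons" and "modifiers" can be assigned to int variables for bitwise tests.

```ctl
// MousePressed event script of a shape (illustrative sketch)
main(mapping event)
{
  // TRUE if the event was generated from a touch gesture, FALSE for a real mouse event
  bool fromTouch = event["isSynthesized"];

  // "buttons" and "modifiers" are OR combinations of CTRL constants,
  // so a single state is tested with a bitwise AND
  int buttons = event["buttons"];
  int modifiers = event["modifiers"];
  bool leftPressed = (buttons & MOUSE_LEFT) != 0;
  bool shiftPressed = (modifiers & KEY_SHIFT) != 0;

  if (fromTouch)
  {
    DebugN("MousePressed via touch", event["buttonAsString"], event["localPos"]);
  }
  else
  {
    DebugN("MousePressed via mouse", "left:", leftPressed, "shift:", shiftPressed);
  }
}
```

Branching on isSynthesized in this way allows one script to serve both input methods, for example to skip hover-dependent logic when the event comes from a touch gesture.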

How to use touch controls

All primitive shapes use the events MousePressed, MouseReleased, RightMousePressed, Clicked and DoubleClicked. Additionally each primitive shape (as well as the supported widget types) has the read-only property “armed” which is true as long as the object is pressed with the finger and the finger is located above the shape.

Example


To implement a touch control where one object must be held down while a second object starts an action, the Clicked or MousePressed event of the second object must check the "armed" state of the first object.
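A minimal CTRL sketch of this check is shown below. The shape name "BTN_ENABLE" is hypothetical, and reading the "armed" property with getValue() is an assumption about how the read-only property is accessed. The debug output stands in for the actual action.

```ctl
// Clicked event script of the second object (illustrative sketch)
main(mapping event)
{
  bool armed;

  // Read the read-only "armed" property of the first object;
  // "BTN_ENABLE" is a hypothetical shape name
  getValue("BTN_ENABLE", "armed", armed);

  if (!armed)
  {
    // The first object is not being held down: ignore the click
    return;
  }

  // Placeholder for the action that requires both objects
  DebugN("Action started while BTN_ENABLE is pressed");
}
```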

On-Screen Keyboard

WinCC OA provides a custom on-screen keyboard when the -touch option is used. The keyboard is displayed automatically when the user selects an editable field, and it is shown in front of the UI. The available languages are German, English and Russian.

The virtual on-screen keyboard is not used for the Mobile UI Application.

Additional details

The on-screen keyboard is loaded if the environment variable QT_IM_MODULE is either not set or explicitly set to "WinCC_OA_VirtualKeyboard". In this case, the UI manager internally loads the script "virtualKeyboard.ctl". The script uses the callback functions "show()" and "hide()", which are called automatically by the UI manager when the keyboard should be displayed or hidden.

To completely disable the on-screen keyboard panel and always use the on-screen keyboard provided by the system, the environment variable QT_IM_MODULE must be set to "WinCC_OA_VirtualKeyboard:nopanel".
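How the variable is set depends on the platform and on how the UI manager is started, which is not covered here. As a sketch, the assignment that has to be present in the environment of the UI manager would read:

```
QT_IM_MODULE=WinCC_OA_VirtualKeyboard:nopanel
```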

By default, the keyboard is displayed by opening the panel "virtualKeyboard.pnl" within its parent module "_VirtualKeyboard_". This module is created automatically by the UI manager.

Since the UI manager can only open a panel after reading the config files of a project, the Desktop UI Project Selection cannot use this panel, and the system default on-screen keyboard is used instead. On Windows this is TabTip.exe, on Linux xvkbd (with kvkbd as fallback).

Restrictions

The following restrictions must be considered when using the WinCC OA touch feature:

- The GEDI does not support touch controls

- The trend widget does not support rulers in touch mode. Additionally touch interaction with the trend axis is not available.

- Panning or pinching cannot be started on objects that have a dedicated touch implementation. These objects are the WinCC OA Trend and custom EWOs with a corresponding touch implementation.

- Multi-touch controls for using multiple objects simultaneously are restricted to the following combinations:

  - Widget / widget

  - Primitive shape / primitive shape

  The combination widget / primitive shape does NOT work.

- Widgets that support multi-touch controls are restricted to:

  - Pushbutton

  - Slider widget

  - Thumbwheel

  - Toggleswitch

- There are the following restrictions for events in touch mode:

| Event | Unsupported widgets |

| DoubleClicked | Bar Trend, Selection List, Table, TextEdit, TreeView, DP TreeView |

| KeyboardFocusIn | Primitive objects, Selection List, Tab, Bar Trend, Clock |

| KeyboardFocusOut | Primitive objects, Selection List, Tab, Bar Trend, Clock, DP TreeView |

| MouseOver | Tab |

| RightMousePressed | Bar Trend, Selection List, Table, TextEdit, TreeView, DP TreeView |

| SelectionChanged | Primitive objects, Table |

Touch Support for ULC UX

Chrome/Firefox: Touch Support works for both browsers.

Microsoft Edge:

Currently the Touch Support in Microsoft Edge is only experimental.

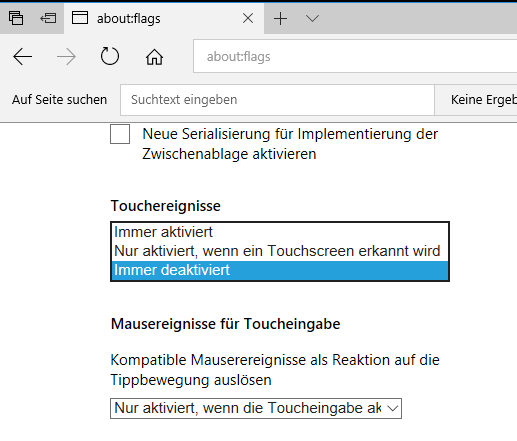

In Microsoft Edge the touch events are deactivated by default. The touch events can be activated in the settings of Microsoft Edge:

Open the settings by entering about:flags in the address bar. Under "Enable touch events", three options are available:

- Always On

- Only on when a touchscreen is detected

- Always off (default)

IE11: Currently there is no touch support in IE11.