System planning

WinCC OA can be used to implement video management systems of different sizes, from small systems with a few cameras to large distributed systems with many peripheral devices that can be integrated into higher-level systems via several interfaces. Depending on the requirements, the following must be considered:

- Choosing an adequate CPU
- Defining the number of required write and read operations
- Single or redundant configuration servers
- Number of streaming servers
- Type and capacity of storage systems
- Number of interface computers
- Number of workstations
- Which software components are required

This chapter provides assistance with system planning. However, because requirements differ widely between projects, it is not possible to give statements that are valid for all projects.

Choosing an adequate CPU

Simultaneously processing, decoding and displaying high-quality, high-resolution video data from several cameras is very demanding for the processor. The processor is therefore a crucial factor for system performance. In principle, WinCC OA video can run on all current CPUs (from Intel Atom to i7). However, for optimal results the CPU should support the SSE2 instruction set extension. High-end processors are necessary if many video streams must be decoded and displayed simultaneously.

Example

Recording 52 channels with 25 fps @ 3 Mbps resulted in a CPU load of 30 - 50% on the following system:

- CPU: Intel Xeon E5620 4C/8T 2.40 GHz 12 MB
- RAM: 8 GB
- Video mass memory: 64 TByte, 400 Mbps writing in continuous operation (real-time traffic), < 100 Mbps reading
- Network: Gigabit Ethernet
- Operating system: Win Server x64

Similar or better high-end processors can also be used. When selecting a CPU, consider CPU benchmark results as well as processor type and clock rate.

Calculating the RAM

Only uncompressed data in the form of color information per pixel can be passed to a graphics adapter. Therefore, data compressed by the sender must be decoded after it is transmitted to the receiver. To calculate the RAM the receiver requires to display the video, consider how the video data was digitized by the sender.

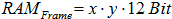

Used color model

WinCC OA video can decode video images according to the M-JPEG, H.264 and MPEG-4 standards used by current encoders. All of these standards generally use the YUV 4:2:0 (YV12) color model, which needs only 12 bits to store the color information of a pixel.

The WinCC OA video decoder needs enough RAM to save the decoded video images in the YUV 4:2:0 format. The YUV data is written to the corresponding memory of the graphics adapter as soon as it is provided by the video decoder. The graphics adapter converts the data to the RGB color space and displays each pixel with the appropriate color value.

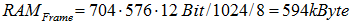

Considering the picture resolution (x*y pixel) of the coded video image, the memory required for all pixels can be calculated as follows:
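The formula itself is not reproduced in this text. As a minimal sketch, assuming only the 12 bits per pixel stated above for YUV 4:2:0, the raw size of one decoded frame can be estimated as follows. The function name `yuv420_frame_bytes` is a hypothetical helper and not part of WinCC OA.

```python
def yuv420_frame_bytes(width: int, height: int) -> int:
    """Estimate the bytes needed for one decoded YUV 4:2:0 frame.

    Assumes 12 bits per pixel, as stated in the text, and rounds up
    to whole bytes.
    """
    bits = width * height * 12
    return (bits + 7) // 8


# Raw frame sizes for the resolutions mentioned in this chapter
for name, (w, h) in {
    "CIF": (352, 288),
    "4CIF": (704, 576),
    "Half HD": (1280, 720),
    "Full HD": (1920, 1080),
}.items():
    print(f"{name}: {yuv420_frame_bytes(w, h)} Byte")
```

These values are the raw pixel data only. The memory actually reserved per frame is larger, as described in the following sections.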

In addition to the memory needed for the pixels of a video image the following details must be considered in order to calculate the required RAM:

Memory pools

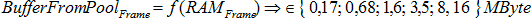

WinCC OA video uses several memory pools. Since allocating and deallocating memory strongly affects performance, memory pools with fixed-size blocks are created. The WinCC OA video decoder gets the required memory from these pools. Fixed-size blocks are defined for the common picture resolutions (CIF, 4CIF, Half HD, Full HD) and the appropriate memory is allocated once.

If the video decoder requires a specific memory size, it does not get exactly that size but the next larger memory block.

WinCC OA video provides one memory pool for each of the common resolutions:

| Resolution | Pool size | Format |
|---|---|---|
| 352 x 288 Pixel | 176,128 Byte = 172 kByte | CIF |
| 720 x 576 Pixel | 716,800 Byte = 700 kByte | 4CIF, PAL |
| 1280 x 720 Pixel | 1,593,344 Byte = approx. 1.6 MByte | Half HD (HD ready) |
| 1920 x 1080 Pixel | 3,579,904 Byte = approx. 3.5 MByte | Full HD |
| > 1920 x 1080 Pixel | 8 MByte, 16 MByte | > Full HD |
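The "next larger block" rule can be sketched as a simple lookup over the pool sizes in the table. This is an illustration, not the actual allocator: the names `POOL_BLOCKS` and `select_pool_block` are hypothetical, selection purely by byte size is an assumption, and 8 MByte and 16 MByte are assumed to mean 8 * 1024 * 1024 and 16 * 1024 * 1024 bytes.

```python
# Pool block sizes in bytes, taken from the table above.
# The two largest entries assume 1 MByte = 1024 * 1024 Byte.
POOL_BLOCKS = [
    ("CIF", 176_128),
    ("4CIF, PAL", 716_800),
    ("Half HD", 1_593_344),
    ("Full HD", 3_579_904),
    ("> Full HD (8 MByte)", 8 * 1024 * 1024),
    ("> Full HD (16 MByte)", 16 * 1024 * 1024),
]


def select_pool_block(required_bytes: int) -> tuple[str, int]:
    """Return the smallest pool block that can hold required_bytes."""
    for name, size in POOL_BLOCKS:
        if required_bytes <= size:
            return name, size
    raise ValueError("frame is larger than the largest pool block")


# A 704 x 576 frame at 12 bits per pixel needs 608,256 bytes of raw data
raw_4cif = 704 * 576 * 12 // 8
print(select_pool_block(raw_4cif))
```

For a 704 x 576 frame the lookup returns the 716,800-byte block, so the RAM estimates later in this chapter should be based on the block size rather than on the raw frame size.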

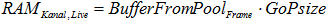

The size of the returned memory in which an image (frame) is internally saved can be expressed as follows:

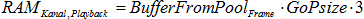

GoP length

Video compression usually combines successive images (frames) into groups of interdependent images and compresses and encodes these groups. Such a group is called a Group of Pictures (GoP). Some images are coded as reference images (I frames), others as difference images (P and B frames). The GoP size or length defines the distance between two I frames. This value influences the RAM needed by the receiver. WinCC OA video supports playback with frame-by-frame positioning (forward and backward), even at positions where only a difference image exists. To enable this positioning, the video decoder must keep three complete GoPs in memory, which means that memory must be allocated for each decoded frame of those three GoPs.

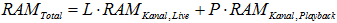

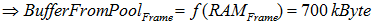

In CCTV applications, typical GoP lengths are about 25 to 30 frames. Because the GoPs are buffered as described, WinCC OA limits the GoP length to 100 frames. The memory required for a playback channel can be calculated as follows:

When displaying a live stream no more than one GoP is stored. Therefore less RAM is required:

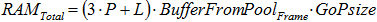

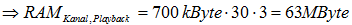

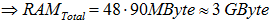

Overall RAM needed for simultaneously displaying L live streams and P playback channels with a fixed resolution is as follows:

Increasing the GoP length reduces the storage volume and the transmission bandwidth because fewer full images must be transmitted. However, it also means that the receiver needs more RAM.

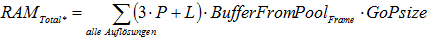

Note that the previous formula only applies when all cameras use the same resolution. If different resolutions are used, the pool memory is not deallocated. The previous formula must therefore be applied to each of the resolutions in use, and the total RAM required is the sum of all results.
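The formulas referenced in this section are not reproduced in this text. The following sketch reconstructs them from the statements above, under these assumptions: a playback channel buffers three GoPs, a live stream buffers at most one GoP, and every buffered frame occupies one pool block. The function names and the mixed-resolution figures in the usage example are hypothetical.

```python
PLAYBACK_GOPS = 3  # three complete GoPs are buffered per playback channel
LIVE_GOPS = 1      # no more than one GoP is buffered per live stream


def ram_bytes(live: int, playback: int, gop_length: int, block_bytes: int) -> int:
    """Estimate the decoder RAM for channels that share one resolution."""
    frames = (live * LIVE_GOPS + playback * PLAYBACK_GOPS) * gop_length
    return frames * block_bytes


def total_ram_bytes(groups: list[tuple[int, int, int, int]]) -> int:
    """Sum the estimate over all resolutions in use."""
    return sum(ram_bytes(*group) for group in groups)


# Hypothetical mix: (live, playback, GoP length, pool block size in bytes)
groups = [
    (16, 4, 30, 716_800),   # 4CIF channels
    (8, 0, 25, 3_579_904),  # Full HD live streams
]
print(f"{total_ram_bytes(groups) / 1024**3:.2f} GByte")
```

The estimate covers only the decoded frame buffers. RAM for the operating system and other processes must be planned in addition.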

Example

In this example the necessary RAM is calculated using the following values.

- The receiver shall display 48 video channels simultaneously (live and playback channels are arbitrarily used)
- Resolution of the video cameras is 4CIF (704 × 576 Pixels)
- Used GoP length is 30

In the worst case only playback connections are used. Therefore the following RAM is necessary:
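The result of this calculation is not reproduced in this text. As a hedged reconstruction, assuming three buffered GoPs per playback channel and the 716,800-byte pool block (the next larger block for a 704 x 576 frame), the worst case works out as follows:

```python
channels = 48          # all channels used for playback (worst case)
gops_buffered = 3      # complete GoPs kept in memory per playback channel
gop_length = 30        # frames per GoP
block_bytes = 716_800  # pool block for 4CIF / PAL, in bytes

ram = channels * gops_buffered * gop_length * block_bytes
print(f"{ram} Byte = {ram / 1024**3:.2f} GByte")
```

Under these assumptions the decoder needs about 2.9 GByte of RAM for the frame buffers alone.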

Calculating storage capacity for the streaming server

This section describes the calculation of the required volume for storing video data. The following must be considered:

- Is the recording continuous or event based?
- Is the data recorded in a ring (old data is deleted when the storage capacity is exhausted)?
- Over which period of time shall data be recorded?
- In case of permanent recording: How many hours must the data be stored? How many hours must be recorded per camera each day?
- In case of event based recording: How many hours must the data be stored? How many hours must be recorded per camera each day?
- Which resolution is necessary (CIF, 4CIF, Half HD, Full HD)?
- Which frame rate (fps) is needed normally and in case of an event (5, 10, 25 fps)?
- Is a downtime of the storage system allowed? If yes, for how long? This determines whether buffering is necessary, which increases the required RAM or buffer.

EXAMPLE

In the following example the data volume is calculated using the following parameters:

- Number of cameras: 35
- Video data is going to be stored for 96 hours (4 days). Each camera records about 22 hours per day.
- The videos of event based recording shall be stored for up to 336 hours (14 days). Each camera records about 2 hours per day.
- Image quality: 4CIF (704 × 576 Pixel)
- Frame rate: 4 fps normally and 25 fps in case of event based recording

To calculate the data volume the following practical values regarding frame rate and image quality have to be considered:

- 4CIF@4fps is equivalent to a data rate of 250 - 440 kbit/s per camera
- 4CIF@25fps is equivalent to a data rate of 1500 - 2700 kbit/s per camera

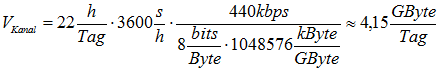

The highest data rate must be used to calculate the required data volume for the recording. Continuous recording results in a data volume of about 4.15 GByte per camera per day. The calculation is as follows:

440 kbit/s / 8 * 3600 s/h * 22 h = 4,356,000 kByte ≈ 4.15 GByte (with 1 GByte = 1024 * 1024 kByte)

For 35 cameras and a maximum storage time of 4 days the following data volume is calculated:

4.15 * 4 * 35 = 581 GByte, rounded up to about 600 GByte

Event based recording results in a data volume of about 2.32 GByte per camera per day, calculated as follows:

2700 kbit/s / 8 * 3600 s/h * 2 h = 2,430,000 kByte ≈ 2.32 GByte

For 35 cameras and a maximum storage time of 14 days the following data volume is calculated:

2.32 * 14 * 35 ≈ 1,136 GByte

Therefore a data volume of about 1,136 GByte + 600 GByte ≈ 1.7 TByte is necessary for the storage system defined in this example.
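The same arithmetic can be parameterized for other projects. The sketch below assumes the unit convention that reproduces the figures of this example (kbit/s divided by 8 gives kByte/s, and 1 GByte = 1024 * 1024 kByte). The function names are hypothetical.

```python
def gbyte_per_camera_day(kbit_per_s: float, hours_per_day: float) -> float:
    """Data volume of one camera per day in GByte."""
    kbyte = kbit_per_s / 8 * 3600 * hours_per_day
    return kbyte / (1024 * 1024)


def storage_gbyte(cameras: int, kbit_per_s: float,
                  hours_per_day: float, retention_days: int) -> float:
    """Data volume for all cameras over the whole retention period."""
    return gbyte_per_camera_day(kbit_per_s, hours_per_day) * retention_days * cameras


# Values from the example: 35 cameras, highest data rate of each range
continuous = storage_gbyte(35, 440, 22, 4)   # 4 fps, 22 h/day, kept 4 days
event = storage_gbyte(35, 2700, 2, 14)       # 25 fps, 2 h/day, kept 14 days

print(f"continuous: {continuous:.0f} GByte")
print(f"event based: {event:.0f} GByte")
print(f"total: {(continuous + event) / 1024:.2f} TByte")
```

Because the unrounded per-day values are used, the totals differ slightly from the rounded figures in the text, but they lead to the same planning figure of about 1.7 TByte.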

Requirements for the used recording system

If many video streams are to be recorded simultaneously in a WinCC OA video system, the performance requirements for the recording systems must be taken into account. The following parameters must be considered:

- Sum of write and read operations per second (input/output operations per second = IOPS)
- Average transfer rate (in MBit/s)

Read and write operations per second

The GoP length influences the requirements for the recording system. The following must be considered to calculate the read and write operations during recording via the WinCC OA video streaming server.

Each write of streaming data counts as three accesses:

- 1 write access to save the binary data (the GoP)
- 1 write access to update the WinCC OA video index database
- 1 reserve

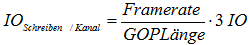

Considering the GoP length and the frame rate results in the number of accesses per channel.

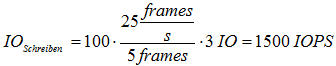

For 100 channels, a GoP length of 5 frames and a frame rate of 5 fps the number of accesses is calculated as follows:

In case of a frame rate of 25 fps:

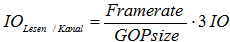

The same number of accesses can be assumed for a read access which results in the following formula:
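The formulas and results of this section are not reproduced in this text. The following sketch is a reconstruction under stated assumptions: one GoP is written every GoP length / frame rate seconds, each GoP write costs the three accesses listed above, and read accesses are assumed to equal write accesses. The function names are hypothetical.

```python
ACCESSES_PER_GOP_WRITE = 3  # binary data, index database, reserve


def write_iops(channels: int, fps: float, gop_length: int) -> float:
    """Write accesses per second for all channels."""
    gops_per_second = fps / gop_length
    return channels * gops_per_second * ACCESSES_PER_GOP_WRITE


def total_iops(channels: int, fps: float, gop_length: int) -> float:
    """Write plus read accesses, assuming the same number for both."""
    return 2 * write_iops(channels, fps, gop_length)


# 100 channels with a GoP length of 5 frames
print(write_iops(100, 5, 5))    # at 5 fps
print(write_iops(100, 25, 5))   # at 25 fps
print(total_iops(100, 25, 5))   # including read accesses
```

Under these assumptions a longer GoP lowers the number of accesses per second, which is why the GoP length matters when dimensioning the recording system.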

Transfer rate

The I/O load on the network adapters of the computers must also be considered during the planning phase. The following values apply to data transfer via RTP:

- About 70 PPS (packets per second) must be transmitted for a video stream with PAL resolution, 5 fps and H.264.
- About 350 PPS must be transmitted for a video stream with PAL resolution, 25 fps and H.264.
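Both figures correspond to about 14 packets per frame (70 / 5 = 350 / 25 = 14). As a rough sketch, assuming the packet rate scales linearly with frame rate and stream count for PAL resolution and H.264, the load on a network adapter can be estimated as follows. The function name is hypothetical.

```python
# Derived from the two figures above: 70 PPS at 5 fps, 350 PPS at 25 fps
PACKETS_PER_FRAME = 70 / 5


def packets_per_second(streams: int, fps: float) -> float:
    """Estimate the RTP packet rate for PAL / H.264 streams."""
    return streams * fps * PACKETS_PER_FRAME


print(packets_per_second(1, 25))    # one stream at 25 fps
print(packets_per_second(100, 25))  # 100 streams at 25 fps
```

Other resolutions, codecs or stream profiles produce different packet sizes and counts, so the factor of 14 should not be carried over to them without measurement.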

Non-functional requirements

Non-functional requirements define the specific properties a system needs. Since digital video processing (coding, decoding, displaying and storing video data with high quality and frame rate) is very demanding even on modern multi-core hardware platforms, many factors must be considered to fulfill non-functional requirements such as:

- reliability
- operability
- capacity of used hardware
- availability
- performance, etc.

Based on experience, the following factors must be considered when calculating video streams in such systems:

- A video stream with a resolution of 756x576 pixels (about 4CIF) and a frame rate of 25 fps coded in H.265 format needs up to 3 Mbps bandwidth for its stream profile.
- A Full HD stream (1920x1080 pixels) means three times the amount of data, three times the storage space and up to five times the performance requirement.

System performance

A WinCC OA video system is a system distributed over a network. Network and PC components are therefore important factors for system performance.

Network:

Video streaming places higher demands on a network. A video stream as described above needs about 0.1 - 3 Mbps, depending on the variation in image content and on the stream profile. For video systems with 100 cameras, a worst-case data rate of 300 Mbps must be considered.

If more than 50 video streams are to be processed on one computer, the computer must be connected to the network via Gigabit Ethernet.

Note that video streaming may affect the traffic of other applications on some network nodes.

Depending on the requirements WinCC OA video can transmit streams via unicast or multicast.

The number of different sources to be displayed at the work and display stations is relevant for the network layout.

WinCC OA video supports network planning with maximum flexibility. A WinCC OA video receiver (client) specifies whether the data shall be received via multicast or unicast. For example, TV channels can be distributed efficiently via multicast, whereas CCTV channels are transmitted to the receiver via unicast.

Consider the following information for multicast transmissions:

- Multicast makes it harder to enforce data protection requirements (moreover, current devices do not support SRTP, the Secure Real-Time Transport Protocol)
- Multicast transmissions require layer 3 network components for the multicast query
- Multicast snooping is required for the layer 3 components

Depending on the operational safety requirements, it may be necessary to operate the video network independently of the data network. Normally, VLAN segmentation (separate networks on the same hardware) with appropriate prioritization is sufficient to prevent side effects.

Hard disk:

Computers that process video data are subject to an increased I/O load. However, this normally has no side effects on other applications.

The mass storage of streaming servers that record and display video is exposed to increased loads.

The streaming performance of a streaming server depends on the data throughput of its mass storage. A maximum streaming performance of about 20 streams (e.g. 19 recording streams and 1 playback stream) can be assumed for a standard hard disk.

RAID arrays can help to achieve better streaming performance. SSDs can also increase the streaming performance, especially if many video channels are recorded on the system disk of the computer.

| Hard disk type | Maximum number of streams |
|---|---|
| Standard hard disk | about 20 streams (e.g. 19 recording streams and 1 playback stream) |
| RAID 5 system with 8 hard disks | about 75 streams |
| RAID 0 system with 8 hard disks | about 150 streams |

CPU:

The CPU of a computer that decodes video is under increased load. The decoding performance limit of modern CPUs with 8 cores (as of 2011) is 64 video streams (at full frame rate and resolution).

RAM:

If a computer is to decode and display up to 64 video streams at the same time, the available RAM (at least 8 GByte) and the graphics adapter are very important.

The memory requirements depend on the displayed streams. Playback scenarios with many playback channels need more memory than live scenarios. Moreover, a longer intra-frame interval in the encoding used (e.g. MPEG-2/4, H.264) also increases the memory demand. A computer that only displays live video needs less RAM, e.g. 8 GByte of RAM is sufficient for 64 live video streams.

Due to the increased memory requirement for video decoding, multi-channel scenarios (displaying more than 24 video streams at the same time) are only possible with a 64-bit operating system.

There are no significant differences between Windows and Linux. For Linux, NVIDIA graphics adapters are recommended, since these adapters support enough XVideo ports.

Operability

Operability is ensured as long as the performance limit of a computer is not reached. To avoid reaching this limit, it may be necessary to limit the number of simultaneously displayed video channels. Video streams should only be displayed on computers whose processors have more than one core. The decoding performance limit of modern 64-bit processors with 8 cores is about 64 video streams at full frame rate and resolution.

Division of processes to other computers

For up to 64 video streams all services like video integration, video recording and video display can be operated on one powerful computer (e.g. i7 processor with 8 cores, 16 GByte RAM, NVIDIA graphics adapter).

If the performance of one computer is not sufficient, the processes must be distributed. In that case the recording and the interface are moved to separate streaming servers. A separate streaming server can record about 75 video streams (4CIF@25 fps) with RAID level 5 and about 150 streams with RAID level 0. If the network is dimensioned appropriately, the streaming performance of a system can be scaled by adding servers.

A full system separation is only necessary if the requirements of the video system exceed what the data network can handle.
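For a first estimate of the number of streaming servers, the per-server stream limits quoted in this chapter can be applied directly. The sketch below considers only mass-storage throughput. CPU, RAM, network capacity and redundancy are deliberately ignored, and the names `STREAMS_PER_SERVER` and `servers_needed` are hypothetical.

```python
import math

# Approximate stream limits per server, taken from the hard disk table
STREAMS_PER_SERVER = {
    "standard": 20,  # single standard hard disk
    "raid5": 75,     # RAID 5 system with 8 hard disks
    "raid0": 150,    # RAID 0 system with 8 hard disks
}


def servers_needed(streams: int, storage: str) -> int:
    """Minimum number of streaming servers for the given storage type."""
    return math.ceil(streams / STREAMS_PER_SERVER[storage])


print(servers_needed(200, "raid5"))
print(servers_needed(200, "raid0"))
```

The limits are rough planning values for 4CIF@25 fps, so a real project should leave headroom and verify the figures with the actual hardware.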